Motion capturing is an indispensable technique in today’s film and video game productions. In this process, the movements of a real object, be it a person, animal or machine, are captured in real time and saved on a computer as a three-dimensional motion sequence.

Text & Photos: Steffen Ringkamp

With classical motion capturing (“mocap”) the actor or performer wears a suit fitted with markers and sensors and is filmed by multiple special cameras. The performer’s movements, and with them the changes in the markers’ position and orientation, are recorded and imported into a 3D programme. Here the data is transferred onto a type of skeleton, which is then combined with the object to be animated. Compared with the interpolation between key frames previously used in animation studios, this makes the animation a lot more fluid and natural.
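To illustrate the key-frame interpolation mentioned above, here is a minimal Python sketch. It assumes a single joint angle stored as a handful of (time, angle) key frames and fills the gaps with straight-line interpolation. The function name `interpolate_keyframes` and the elbow-angle values are hypothetical and chosen only for illustration; 3D programmes typically offer smoother curve types as well.

```python
from bisect import bisect_right


def interpolate_keyframes(keyframes, t):
    """Linearly interpolate a value at time t from sorted (time, value) key frames."""
    times = [time for time, _ in keyframes]
    # Clamp to the first or last key frame outside the animated range.
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    # Find the pair of key frames that encloses t.
    i = bisect_right(times, t)
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Hypothetical elbow angle in degrees, set by hand at three points in time.
elbow_keyframes = [(0.0, 0.0), (1.0, 90.0), (2.0, 30.0)]

# Sample the curve four times per second; everything between the key frames is computed.
samples = [interpolate_keyframes(elbow_keyframes, frame / 4) for frame in range(9)]
```

With key frames, only three values come from the animator and the rest are calculated. A motion capture recording instead supplies a measured value for every frame, which is one way to see why the captured result tends to look more natural.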

In the early days only the body of the performer could be recorded, but MoCap technicians have now extended this to so-called performance capture. Sensors are also placed on the face in order to achieve realistic facial expressions for the character to be animated. The most famous example of this procedure is Gollum from “The Lord of the Rings” films. The gestures and facial expressions of Andy Serkis were transferred so perfectly that a credible character was created in the film by entirely computer-animated means.

The video games developer Rockstar Games took things a step further with their game “L.A. Noire”, which was released in 2011. This detective game utilised the so-called MotionScan method, in which the actor’s face was recorded by a total of 32 video cameras that covered every angle around the actor and were also installed above and below the actor’s field of vision. The vast amount of data captured was then transferred and displayed on a 3D model in real time. This set-up enabled incredibly realistic facial features, so that later in the game the facial gestures of the characters could be used to tell who was lying and who was telling the truth.

Motion capturing can now be found in all areas. However, classical MoCap with sensor suits comes with a big catch: the cost. Acquiring the hardware, meaning specialist cameras and sensor suits, is expensive, as is renting premises in one of the countless MoCap studios, and small companies with limited budgets cannot currently afford either. The introduction of Microsoft’s motion sensing input device “Kinect” was therefore an interesting development.

The name Kinect comes from the terms “kinetic” and “connect”. The flat black housing holds two infra-red sensors and a 640 x 480 pixel camera, and processes approx. 30 images per second. Released in November 2010, it sold approx. 2.5 million units worldwide in the first month. The camera was intended solely as a new input medium for Xbox games, but shortly after its introduction the first driver hacks appeared, which extended the use of the Kinect to include some interesting possibilities. Microsoft did initially threaten not to tolerate such modifications, but the software giant has since come to see these hacks as inspiring. So now the imaginations of programmers know no bounds. A team of Chinese students caused a sensation when they used the Kinect’s camera for motion capturing and came up with some interesting results.

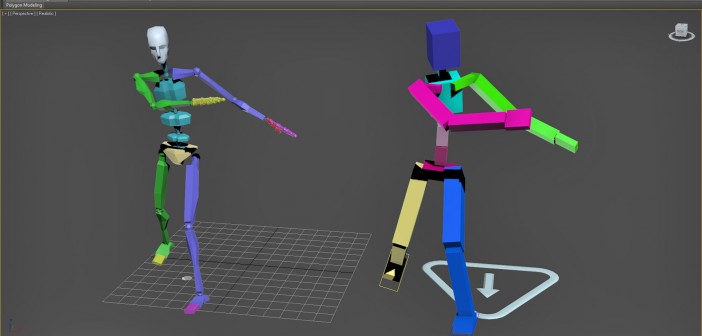

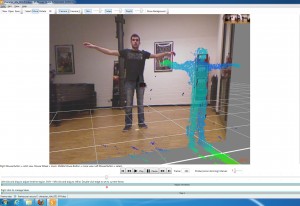

The Russian company iPiSoft brought out the first useful programme which allows movements to be captured and transferred using the Kinect. With the assistance of both infra-red sensors, depth images can be generated which can then be used as a template to transfer movements onto a virtual skeleton. The results of this marker-less MoCap are astoundingly good and can be easily transferred to today’s popular 3D programmes such as 3ds Max and Maya. As such a set-up of two Kinect cameras is really flexible in terms of room size and structure, this form of MoCap is now used in virtually all German SAE Institutes and is a fixed part of teaching in the new curriculum. This gives students the opportunity to embed intricate animations in their final projects.
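How a depth image can serve as a template for a skeleton can be hinted at with a short Python sketch: each pixel of a depth image, together with its measured distance, corresponds to a point in 3D space. The sketch below assumes a simple pinhole camera model. The focal length of 575 pixels and the image centre of (320, 240) are illustrative assumptions for a 640 x 480 image, not official Kinect values, and the function name `depth_pixel_to_point` is hypothetical. This is not a description of how iPiSoft's software works internally.

```python
def depth_pixel_to_point(u, v, depth_m, fx=575.0, fy=575.0, cx=320.0, cy=240.0):
    """Convert a depth-image pixel (u, v) with a depth in metres to a 3D point.

    Assumes a pinhole camera model. fx and fy are the focal lengths in pixels,
    (cx, cy) is the image centre. All default values are assumptions for
    illustration only.
    """
    x = (u - cx) * depth_m / fx  # horizontal offset from the optical axis
    y = (v - cy) * depth_m / fy  # vertical offset from the optical axis
    return (x, y, depth_m)


# A pixel in the image centre lies straight ahead of the camera.
centre_point = depth_pixel_to_point(320, 240, 2.0)

# A pixel further to the right maps to a point to the right of the optical axis.
right_point = depth_pixel_to_point(895, 240, 2.0)
```

Applied to every pixel, this yields a cloud of 3D points describing the performer's body surface, against which a tracking programme could then fit the joints of a virtual skeleton frame by frame.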

A breakthrough in the area of gesture recognition is expected at the end of 2012/beginning of 2013: Leap (http://www.leapmotion.com), a start-up company from San Francisco, plans to introduce its 3D gesture controller Leap Motion onto the market. This is a small box which is connected to a PC by USB or Bluetooth and recognises movements to an accuracy of 1/100 mm. This makes Leap Motion some 100 times more accurate than the motion capture devices used up until now. From a MoCap technical point of view this could represent a real revolution. Initially, however, the box is only intended for operating software and programs. The initial plans for Microsoft’s Kinect were similar, though, and the inventiveness of capable programmers quickly revealed other possible uses which had previously not been thought of.

The beginning of motion capturing:

“Mike the talking head” was a breakthrough in the field of facial motion capture in 1988. The head of Mike Gribble was scanned and digitised and could be used to visualise spoken words.