This article presents one of the latest interactive audiovisual projects by Andrea Santini (UBIKteatro / SAE London / SAE Ljubljana), a system enabling musical interplay within a responsive audio-visual environment.

Text and Photos: Andrea Santini; additional Photos: Fenu, Toolkit Festival

Motion capture and optical ‘tracking’ technology, whereby a computer tracks the position and motion of an object or individual in space and determines its spatial coordinates as well as its size, acceleration and other parameters, has been widely employed in the movie and gaming industries (as well as in military and industrial applications) since the 1990s.

The spread of increasingly cheap hardware and DIY solutions, along with software developments and the growth of online communities sharing technical information, has led to exciting applications of motion tracking in interactive digital arts and performance practices, often in combination with video projections. Over the past decade theatre companies such as Ex Machina (Canada) or Troika Ranch (US/Germany) and contemporary dance groups such as Chunky Move (Australia) have been leading the way, successfully integrating these technologies into their shows. Reactable (http://www.reactable.com), an interactive music system with a tangible user interface, is yet another brilliant example of how tracking technology and projections can be employed to significantly improve and expand the creative ‘dialogue’ between humans and electronic machines.

Finally, the introduction of cheap and powerful 3D motion-sensing input devices such as Microsoft Kinect into the consumer video-game market from 2010 onwards has further expanded the range of possibilities and simplified set-ups in the context of digital interactive arts.

OSCILLA is, in some ways, similar to Reactable in that it is primarily an interactive musical environment controlled by the position of objects in space. Reactable, however, uses a fixed-size surface and a set of objects carrying identifier patterns that are recognised by the system to control a range of musical parameters (volume, oscillator frequencies, rhythm etc.).

My idea was to create a simple site-specific system with a scalable interactive area, one that could be enlarged as required to accommodate objects or even people (see Figures 1 & 2) and that would not depend on custom-built objects to trigger its parameters. Instead it would be capable of tracking sets of standard objects or human bodies in two-dimensional space without complicated mappings or pattern-recognition systems. This of course meant introducing substantial limitations to the range of parameters that could be controlled, since the computer would only obtain X-Y spatial coordinates from each interacting element detected by an infrared camera system or Kinect. I felt this was enough for a basic musical interaction that explored the essential principles of sound and music-making, since it could provide control over two fundamental parameters: the pitch (X) and the amplitude (Y) of a variable number of sine wave oscillators.
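As a rough illustration of this X-Y mapping, the Python sketch below turns a normalised tracked position into a frequency and an amplitude and renders a short block of the resulting sine wave. The frequency range, the exponential pitch scaling and the function names are assumptions made for the example; they are not taken from OSCILLA's actual patches.

```python
import math

# Hypothetical pitch range for the horizontal axis (not specified in the article).
F_MIN = 110.0  # Hz at the left edge of the tracked area
F_MAX = 880.0  # Hz at the right edge of the tracked area


def position_to_oscillator(x, y, f_min=F_MIN, f_max=F_MAX):
    """Map a normalised position (x, y in 0..1) to (frequency, amplitude).

    X controls pitch on an exponential scale, so equal distances correspond
    to equal musical intervals (an assumption). Y controls amplitude linearly.
    """
    x = min(max(x, 0.0), 1.0)  # clamp to the tracked area
    y = min(max(y, 0.0), 1.0)
    frequency = f_min * (f_max / f_min) ** x
    amplitude = y
    return frequency, amplitude


def sine_block(frequency, amplitude, sample_rate=44100, n_samples=64, phase=0.0):
    """Render a short block of one sine oscillator as a list of floats."""
    step = 2.0 * math.pi * frequency / sample_rate
    return [amplitude * math.sin(phase + step * n) for n in range(n_samples)]


# One tracked element a third of the way across, at half height.
freq, amp = position_to_oscillator(1.0 / 3.0, 0.5)
block = sine_block(freq, amp)
```

With the assumed three-octave range, each third of the horizontal axis spans one octave, so the element in the usage lines sounds at roughly 220 Hz and half amplitude.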

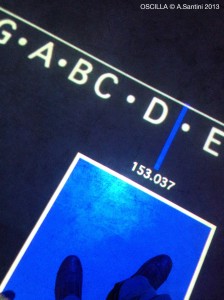

I created a visual ‘mask’ to be projected over the ‘tracked’ area so that users would see standard musical notation along the horizontal axis, as well as the individual position and frequency (in Hertz) of each element being detected. The vertical axis values were assigned to amplitude control. As simple and limited as this may sound by today’s elaborate standards, the platform allowed for a very intuitive exploration of extremely sophisticated harmonic structures within and beyond standard tonal systems. It also became immediately obvious that the system could provide a very effective way to explore and illustrate other fascinating principles of sound and music such as beat frequencies, microtonal intervals, the mathematics of musical ratios and, by changing the oscillators’ wave shape and extending the frequency range as relevant, the continuum between pulse and pitch.
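Two of these principles reduce to very small formulas, sketched here in Python. The function names are hypothetical; the formulas themselves are the standard ones: the beat rate between two close sine tones is the difference of their frequencies, and an interval in cents is 1200 times the base-2 logarithm of the frequency ratio.

```python
import math


def beat_frequency(f1, f2):
    """Beat rate in Hz heard when two sine tones of similar pitch sound together."""
    return abs(f1 - f2)


def interval_cents(f1, f2):
    """Interval from f1 to f2 in cents (100 cents = one equal-tempered semitone)."""
    return 1200.0 * math.log2(f2 / f1)


# Two elements placed at almost the same horizontal position.
print(beat_frequency(440.0, 443.0))         # 3.0 beats per second
# A pure 3:2 fifth is slightly wider than the 700 cents of equal temperament.
print(round(interval_cents(440.0, 660.0)))  # 702
```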

I then decided to integrate a visual counterpart that could plot the relationship between the sounds being generated and, partly due to my sound engineering background, I opted for a ‘technical’ visualisation rather than a purely visual-FX oriented one. I implemented a two-channel (XY) oscilloscope that, when fed sine wave signals, would plot those fascinating patterns known as Lissajous curves. These patterns are a visual representation of the amplitude and pitch relationships between the sounds appearing at the X and Y inputs (complex harmonic motion), just like in a phase meter on a mixing console. For example, a perfect unison (1:1 ratio) of two pitches at similar amplitudes creates a nearly stationary elliptical shape, an octave (1:2 ratio) results in a figure-8 shape, and more elaborate patterns begin to emerge with more complicated intervals (fifth 3:2, major third 5:4, etc.).
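The shapes described above can be reproduced with a few lines of Python. This is a minimal sketch that samples one full cycle of an idealised XY trace from two sine signals with an integer frequency ratio; the function name, the default 90-degree phase offset and the number of points are assumptions for illustration, and the result could be passed to any plotting tool.

```python
import math


def lissajous_points(ratio_x, ratio_y, phase=math.pi / 2,
                     amp_x=1.0, amp_y=1.0, n_points=1000):
    """Sample one full cycle of an XY-oscilloscope trace.

    ratio_x and ratio_y give the integer frequency ratio of the signals at
    the X and Y inputs (e.g. 1 and 2 for an octave). phase is the offset of
    the X signal in radians.
    """
    points = []
    for n in range(n_points):
        t = 2.0 * math.pi * n / n_points
        x = amp_x * math.sin(ratio_x * t + phase)
        y = amp_y * math.sin(ratio_y * t)
        points.append((x, y))
    return points


unison = lissajous_points(1, 1)  # a circle at equal amplitudes and 90 degrees offset
octave = lissajous_points(1, 2)  # a figure-8
fifth = lissajous_points(3, 2)   # a more intricate closed pattern
```

Changing `phase` for the unison squashes the circle into an ellipse and, at zero offset, into a diagonal line. A slight detuning between the two oscillators is equivalent to a slowly drifting phase, so in that case the figure rotates gradually instead of standing perfectly still.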

The trigonometric equations governing Lissajous figures tend to produce the most interesting and structured patterns for consonant musical ratios such as the unison, third, fifth and octave, so the user experience and interaction are further enriched by discovering harmonic consonance in both the auditory and visual domains.
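For reference, the standard parametric form of a Lissajous figure can be written as follows, where the symbols $A$, $B$, $f_x$, $f_y$ and $\delta$ (amplitudes, frequencies and phase offset of the two inputs) are notation introduced here and not taken from the installation itself:

```latex
x(t) = A \sin(2\pi f_x t + \delta), \qquad y(t) = B \sin(2\pi f_y t)

% If the frequency ratio is rational, f_x / f_y = p / q in lowest terms,
% both signals repeat together after the common period
T = \frac{p}{f_x} = \frac{q}{f_y}
```

The trace closes on itself after time $T$. Consonant intervals correspond to small integers $p$ and $q$ (1:1, 2:1, 3:2, 5:4), so the curve closes quickly and forms a simple figure with few lobes, whereas ratios with large $p$ and $q$ take many cycles to close and fill the screen with a dense mesh.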

Finally, I wanted the system to be able to interact with the acoustics of each installation space, so I implemented a flexible output routing system: each element and oscillator could be mixed internally to a stereo feed or, more interestingly, discretely fed to separate loudspeakers that could be arranged in the venue to achieve interesting effects based on the acoustic combination of distinct pitches in space. A good example of the potential of such an arrangement was demonstrated in one of OSCILLA’s first appearances (Kernel Festival, Milan, IT, 2011), where the interactive surface was projected onto the marble altar of a small church and four loudspeakers were placed in a choir-like semicircular fashion in the apse (Figures 3-5).
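The two routing modes can be pictured with a small Python sketch. It is only an assumption about how such a router might be organised: the function name, the round-robin assignment of oscillators to loudspeakers and the equal-gain mixdown are all hypothetical choices made for the example.

```python
def route_outputs(oscillator_blocks, n_speakers, discrete=True):
    """Distribute per-oscillator sample blocks to loudspeaker channels.

    oscillator_blocks: list of equal-length lists of samples, one per oscillator.
    discrete=True  -> oscillator i feeds only speaker i % n_speakers.
    discrete=False -> all oscillators are averaged into every channel
                      (a plain mixdown, e.g. n_speakers=2 for a stereo feed).
    """
    if n_speakers < 1:
        raise ValueError("need at least one loudspeaker")
    if not oscillator_blocks:
        return [[] for _ in range(n_speakers)]
    block_len = len(oscillator_blocks[0])
    channels = [[0.0] * block_len for _ in range(n_speakers)]
    # In mixdown mode, scale by the oscillator count to avoid clipping.
    gain = 1.0 if discrete else 1.0 / len(oscillator_blocks)
    for i, block in enumerate(oscillator_blocks):
        targets = [i % n_speakers] if discrete else range(n_speakers)
        for ch in targets:
            for n, sample in enumerate(block):
                channels[ch][n] += gain * sample
    return channels


# Three oscillators (two-sample blocks for brevity) and four loudspeakers.
blocks = [[1.0, 1.0], [0.5, 0.5], [0.25, 0.25]]
discrete_feed = route_outputs(blocks, n_speakers=4, discrete=True)
stereo_feed = route_outputs(blocks, n_speakers=2, discrete=False)
```

In the discrete case each pitch comes from its own loudspeaker and the combination happens acoustically in the room; in the mixdown case the summing happens inside the computer before the signal reaches the speakers.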

In this specific case the context also determined the use of electronic candles and the subtitle of the installation ‘Modern Rituals’, meant as a playful provocation to stimulate thoughts on our relationship with technology in an accelerated world. In the Latin tradition, incidentally, the term ‘oscilla’ was used to designate small votive objects decorated with faces or masks that were hung during rituals and would swing in the wind (hence the term oscillation).

The programming of OSCILLA went through several development stages and I have created a number of versions to date, mostly working with Pure Data, Max/MSP (Jitter), Quartz Composer and Isadora.

Since the first versions in the spring of 2011, OSCILLA has been presented at festivals and art galleries across Europe in a range of forms, including small tabletop versions, a self-contained model with rear projection and touch-screen mode, medium-size ‘floor versions’ and eight-metre-wide ‘stage versions’ that musicians can interact with while performing.

A short documentary of some of these embodiments can be found online at www.ubikteatro.com following the OSCILLA links. The project is produced and distributed by UBIKteatro (Venice) with PHILIPS as technical partner.

Author: Andrea Santini

Andrea Santini is a digital media artist, lecturer and researcher in the fields of electroacoustic and spatial music, sound art, new media and audio-visual interaction. After his SAE London degree (Audio) he completed an MA in Sonic Arts at Middlesex University, and then obtained a D.E.L. bursary for a PhD on spatial audio and live electronics at the Sonic Arts Research Centre (Belfast), focusing on the spatial music of Venetian composer Luigi Nono.

In late 2011 Andrea rejoined SAE Institute (Ljubljana) where he works as a lecturer and campus academic coordinator for the Audio Production Degree.

Since 2007, as technical director of creative research group UBIKteatro (Venice), Andrea has been focusing on performance and installation projects that incorporate live signal processing, gestural control and video mapping, audiovisual interaction, and reactive and generative systems. More details can be found at www.ubikteatro.com
